Recently, I blogged about an exciting new exam for Dynamics 365 and Power Platform developers. Off the back of this, I wanted to do another series of posts providing revision notes, to help support others who may be sitting the MB-400 exam in future. Similar to my previous series all around the Power BI Exam 70-778, each post will break down the list of skills measured, focusing on the essential details regarding each topic.

This post and all subsequent ones aim to provide a broad outline of the core areas to keep in mind when tackling the exam, linked to appropriate resources for more focused study. Your revision should, ideally, involve a high degree of hands-on testing and familiarity in working with the platform.

Without much further ado, let’s jump into the first topic area of the exam - how to Create a Technical Design, which has a 10-15% weighting and covers the following topics:

Validate requirements and design technical architecture

- design and validate technical architecture

- design authentication and authorization strategy

- determine whether requirements can be met with out-of-the-box functionality

- determine when to use Logic Apps vs. Microsoft Flow

- determine when to use serverless computing vs. plug-ins

- determine when to build a virtual entity data source provider vs. when to use connectors

Create a data model

- design a data model

Power Platform Technical Architecture

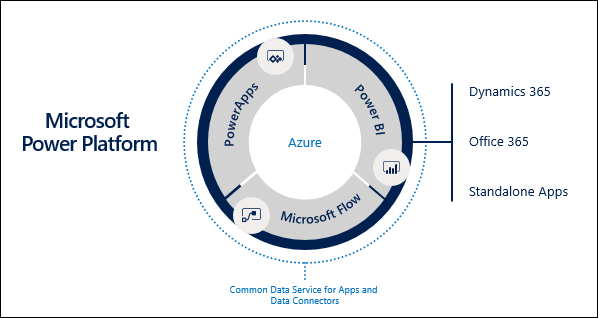

The application previously referred to as Dynamics CRM and now, broadly as Dynamics 365, has morphed and changed considerably from a customer relationship management system to a platform of independent, yet co-dependent applications, namely:

- Power Apps (previously known as PowerApps): These come in two flavours. Traditional Dynamics CRM developers will be most familiar with model-driven apps, which utilise much of the same customisation experience traditionally offered within Dynamics CRM. These types of apps are best suited for applications that are more data-driven and need to be run via a desktop web browser, although they do come with full support for mobile devices. Canvas apps, in comparison, are geared towards mobile-first scenarios, providing app developers with a high degree of freedom in designing their apps and deploying them to a wide variety of different devices or alongside other applications within the Power Platform.

- Power BI: A next-generation Business Intelligence (BI) tool, Power BI provides impressive data modelling, visualisation and deployment capabilities, that enable organisations to better understand data from their various business systems. Despite having its own set of tools and languages, traditional Excel power users should have little difficulty getting to grips with Power BI, thereby allowing them to migrate existing Excel-based reports across with ease.

- Power Automate (previously known as Microsoft Flow): As a tool designed to automate various business processes, Power Automate flows can trigger specific activities based on events from almost any application system. With near feature-parity with traditional Dynamics CRM workflows, Power Automate is a modern and flexible tool to meet various integration requirements.

- The Common Data Service (CDS): Adapted from the existing database platform utilised by Dynamics CRM, the CDS provides a “no-code” environment to create entities (i.e. objects that store data records), develop forms, views and business rules, to name but a few. Within CDS, Microsoft has standardised the various entities to align with the Common Data Model, an open-source initiative that seeks to provide a standard definition of commonly used business entities, such as Account or Contact.

The diagram below, lovingly recycled from Microsoft, illustrates all of these various applications and how they work together with other Microsoft services you may be familiar with:

Understanding how these applications can work in tandem is critical when building out an all-encompassing business application. The examples below provide a flavour of how these applications can work together, but the full list would likely cover several pages:

- Including a Power Automate flow as part of a CDS solution, allowing you to then deploy this out to multiple environments with ease.

- Embedding a Power BI tile or dashboard within a personal dashboard in a model-driven app.

- Embedding a canvas app into Power BI, allowing users to update data in real-time.

Handling Security & Authentication

Ensuring that critical business data is subject to reasonable and, where appropriate, elevated access privileges is typically an essential requirement as part of any central business system. The key benefit that the Power Platform brings to the table in this regard is that it uses one of the best identity management platforms available today - Azure Active Directory (AAD). Some of the benefits that AAD can bring to the table include:

- Providing a true single sign-on (SSO) experience across multiple 1st/3rd party applications, backed up by robust administrator controls and auditing capabilities.

- Allowing full support for user principal or security group level controls, via role-based access controls (RBAC).

- Access to a wide range of various security-minded features, such as Multi-Factor Authentication (MFA), risky sign-in controls and automatic password reset capabilities, should a user account or its associated password be detected as a potential risk.
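To make the AAD piece a little more concrete, here is a minimal sketch of the token request a server-to-server integration might build before calling the CDS Web API. It only constructs the request rather than sending it. The tenant, client ID, secret and environment URL are all made-up placeholders, and I'm assuming the OAuth 2.0 client credentials flow against the v2.0 token endpoint; your own app registration may well call for a different flow.

```python
from urllib.parse import urlencode


def build_token_request(tenant_id: str, client_id: str,
                        client_secret: str, environment_url: str):
    """Return (token_url, form_body) for a client credentials token request.

    Nothing is sent over the network; this only shows the shape of the request.
    """
    token_url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    form = {
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        # The scope is the environment URL followed by "/.default".
        "scope": f"{environment_url.rstrip('/')}/.default",
    }
    return token_url, urlencode(form)


# Hypothetical values, for illustration only.
url, body = build_token_request(
    tenant_id="contoso.onmicrosoft.com",
    client_id="00000000-0000-0000-0000-000000000000",
    client_secret="not-a-real-secret",
    environment_url="https://contoso.crm.dynamics.com/",
)
print(url)
print(body)
```

The form body would be POSTed to the token URL, and the access token in the response then travels as a Bearer token on each Web API call. Whatever that token can do inside CDS is still governed by the security roles assigned to the calling identity.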

When it comes to managing security or access within your various Power Platform components, this will differ, based on which application you are working with:

- For model-driven apps and CDS, you can leverage the existing capabilities within Dynamics CRM, such as Business Units or Security Roles, to provide a structured, hierarchical security model. This functionality can be extended further, via features such as field security profiles, thereby allowing you to secure specific entity fields in a variety of different ways.

- Security for canvas apps will typically be enforced via the data source you are connecting with - for example, users connecting to the Common Data Service will have any security role privileges applied automatically. For other applications, you may need to consult the relevant documentation and ensure, where possible, you are using SSO to simplify this process. Canvas app developers can share their applications with any other user on the tenant, but it’s important to understand what impact sharing will have on any associated app resources.

- Power Automate flows follow similar sharing principles to canvas apps, allowing you to create team flows that others in the organisation can interact with. Security for 3rd party applications is largely dictated in much the same manner as canvas apps.

- Finally, Power BI includes several features to help you manage access, such as Workspaces, Apps or simply sharing your report/dashboard with another user. Most of these features are only available as part of a paid subscription. Again, the security/privileges of any underlying data source depend upon the account used to authenticate with the underlying data. For stringent scenarios, you may need to resort to Row-level security (RLS) to ensure data is restricted accordingly.

Typically, a developer will want to design any application to use CDS as the underlying data source for the solution, as the security and record restriction features afforded here will more than likely be suitable for most situations.
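As a rough way of picturing the hierarchical model that Business Units and Security Roles give you, the toy sketch below checks whether a user can read a record at each security role access level. It is my own simplification, not how the platform implements it: the class and function names are hypothetical, and it ignores teams, record sharing and field security entirely.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Optional


class AccessLevel(IntEnum):
    NONE = 0
    USER = 1            # only records the user owns
    BUSINESS_UNIT = 2   # records owned within the user's business unit
    PARENT_CHILD = 3    # the user's business unit plus all child units
    ORGANIZATION = 4    # every record in the organisation


@dataclass(frozen=True)
class BusinessUnit:
    name: str
    parent: Optional["BusinessUnit"] = None

    def is_within(self, other: "BusinessUnit") -> bool:
        """True if this unit is `other` or sits somewhere beneath it."""
        unit: Optional[BusinessUnit] = self
        while unit is not None:
            if unit == other:
                return True
            unit = unit.parent
        return False


@dataclass(frozen=True)
class User:
    name: str
    business_unit: BusinessUnit


def can_read(user: User, read_level: AccessLevel, owner: User) -> bool:
    """Decide whether `user` can read a record owned by `owner`."""
    if read_level == AccessLevel.ORGANIZATION:
        return True
    if read_level == AccessLevel.PARENT_CHILD:
        return owner.business_unit.is_within(user.business_unit)
    if read_level == AccessLevel.BUSINESS_UNIT:
        return owner.business_unit == user.business_unit
    if read_level == AccessLevel.USER:
        return owner == user
    return False


root = BusinessUnit("Contoso")
sales = BusinessUnit("Sales", parent=root)
alice = User("Alice", root)   # sits in the root business unit
bob = User("Bob", sales)      # sits in a child business unit

print(can_read(alice, AccessLevel.PARENT_CHILD, owner=bob))
print(can_read(bob, AccessLevel.BUSINESS_UNIT, owner=alice))
```

The first check passes because Bob's business unit sits beneath Alice's, while the second fails because Alice's records live outside Bob's own unit.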

Comparing Logic Apps to Microsoft Power Automate Flows

Confusion can arise when figuring out what Azure Logic Apps are and how they relate to Power Automate. That’s because they are almost precisely the same; Power Automate uses Azure Logic Apps underneath the hood and, as such, contains most of the same functionality. Determining the best situation to use one over the other can, therefore, be a bit of a challenge. The list below summarises the pros and cons of each tool:

- Azure Logic Apps

  - Enterprise-grade authoring, integration and development capabilities.

  - Full support for Azure DevOps or Git source control integration.

  - “Pay-as-you-go” - only pay if and when your Logic App executes.

  - Cannot be included in solutions.

  - Must be managed separately in Microsoft Azure.

  - Does not support Office 365 data loss prevention (DLP) policies.

  - Target Audience: Developers who are familiar with dissecting structured JSON definitions.

- Power Automate

  - Easy-to-use development experience.

  - Can be included within solutions and trigger based on specific events within CDS.

  - Supports the same connectors provided within Azure Logic Apps.

  - Difficult to configure alongside complex Application Lifecycle Management (ALM) processes.

  - Fixed monthly subscription, with quotas/limits - may be more expensive compared to Logic Apps.

  - Must be developed using the browser/mobile app - no option to modify underlying code definition.

  - Target Audience: Office 365 power users or low/no-code developers.

In short, you should always start with Power Automate flows in the first instance. Consider migrating across to Logic Apps if your solution grows in complexity, your flow executes hundreds of times per hour, or you need to look at implementing more stringent ALM processes as part of your development cycles. Fortunately, Microsoft makes it really easy to migrate your Power Automate flows to Logic Apps.
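The rule of thumb above can be sketched as a small decision helper. This is just my reading of the guidance, not an official sizing tool: the field names are hypothetical, and the 100 runs per hour threshold is an assumption standing in for "hundreds of times per hour".

```python
from dataclasses import dataclass

# Assumption: "hundreds of times per hour" is taken to mean 100 or more runs.
HIGH_VOLUME_RUNS_PER_HOUR = 100


@dataclass
class AutomationRequirements:
    runs_per_hour: int
    needs_strict_alm: bool = False
    must_ship_in_solution: bool = False


def recommend_tool(req: AutomationRequirements) -> str:
    """Apply the 'start with Power Automate' rule of thumb."""
    # Logic Apps cannot be included in solutions, so this requirement wins outright.
    if req.must_ship_in_solution:
        return "Power Automate"
    # High volume or stringent ALM needs tip the balance towards Logic Apps.
    if req.runs_per_hour >= HIGH_VOLUME_RUNS_PER_HOUR or req.needs_strict_alm:
        return "Azure Logic Apps"
    return "Power Automate"


print(recommend_tool(AutomationRequirements(runs_per_hour=5)))
print(recommend_tool(AutomationRequirements(runs_per_hour=500)))
```

Letting the solution requirement override everything else is a judgment call on my part; in a real project you would weigh licensing costs and connector needs as well.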

Comparing Serverless Computing to Plug-ins

Serverless is one of those buzzwords that gets thrown around a lot these days 🙂 But it is something worth considering, particularly in the context of Dynamics 365 / the Power Platform. The recent changes around API limits also make serverless computing - via the use of the Azure Service Bus - a potentially desirable option to reduce the number of API calls you are making within the application. The list below summarises the pros and cons of each route:

- Serverless Compute

  - Allows developers to build solutions using familiar tools, but leveraging the benefits of Azure.

  - Not subject to any sandbox limitations for code execution.

  - Not ideal when working with non-Azure based services/endpoints.

  - Additional setup and code modifications required to implement.

  - No guarantee of the order of execution for asynchronous plug-ins.

- Plug-ins

  - Traditional, well-tested functionality, with excellent samples available.

  - Works natively for both online/on-premise Dynamics 365 deployments.

  - Reduces technical complexity of any solution, by ensuring it remains solely within Dynamics 365 / the CDS.

  - Full exposure to any Dynamics 365/CDS transaction.

  - Impractical for long-running transactions/code.

  - Not scalable and subject to any platform performance/API restrictions.

  - Restricts your ability to integrate with separate, line-of-business (LOB) applications.
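As a rough illustration of the serverless route, the sketch below shows the kind of handler that might sit behind an Azure Service Bus queue and pick up work that would be impractical inside a plug-in. The payload shape is a heavily simplified assumption: I've kept only three property names that mirror the plug-in execution context (MessageName, PrimaryEntityName and PrimaryEntityId), and the handler itself is hypothetical rather than any official Azure or Dynamics 365 API.

```python
import json


def handle_execution_context(raw_body: str) -> dict:
    """Parse a (simplified) execution context message and describe the work to do.

    A real message carries many more fields; only three are read here.
    """
    context = json.loads(raw_body)
    message = context.get("MessageName")
    entity = context.get("PrimaryEntityName")
    record_id = context.get("PrimaryEntityId")
    if not (message and entity and record_id):
        raise ValueError(
            "payload is missing MessageName, PrimaryEntityName or PrimaryEntityId"
        )
    # Long-running work (calling a LOB system, heavy processing and so on)
    # would happen here, outside the plug-in sandbox.
    return {"action": f"{message.lower()}:{entity}", "record_id": record_id}


# Hypothetical message body, for illustration only.
sample = json.dumps({
    "MessageName": "Create",
    "PrimaryEntityName": "account",
    "PrimaryEntityId": "11111111-1111-1111-1111-111111111111",
})
print(handle_execution_context(sample))
```

Because the handler runs asynchronously and outside the platform transaction, it cannot roll back the original operation, which is one reason plug-ins remain the better fit when you need full exposure to the Dynamics 365/CDS transaction.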

Comparing Virtual Entities to Connectors

The core idea of having a system like Dynamics 365 or the CDS in place is to reduce the number of separate systems within an organisation and, therefore, any complex integration requirements. Unfortunately, this endeavour usually fails in practice and, as system developers, we must, therefore, contemplate two routes to bringing data into Dynamics 365/CDS:

- Virtual Entities: Available now for several years, this feature allows developers to “load” external data sources in their entirety and work with them as standard entities within a model-driven app. Provided that this external data source is accessible via an OData v4 endpoint, it can be connected without issue. The critical restriction around this functionality is that all data will be in a read-only state once retrieved; it is, therefore, impossible to create, update or remove any records loaded within a virtual entity.

- Connectors (AKA Data Flows): A newer experience, available from within the PowerApps portal, this feature leverages the full capabilities provided by Power Query (M) to allow you to bring in data from a multitude of different sources. As part of this, developers can choose to create entities automatically, map data to existing entities and specify whether to import the data once or continually refresh it in the background. Because any records loaded are stored within a proper entity, there are no restrictions when it comes to working with the data. However, this route does require additional setup and familiarity with Power Query and is not bi-directional (e.g. any changes to records imported from SQL Server will not synchronise back across).

Ultimately, the question you should ask yourself when determining which option to use is, “Do I need the ability to create, update or delete records from my external system?” If the answer is no, then consider using Virtual Entities.
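Since virtual entities depend on an OData v4 endpoint, it helps to be comfortable with what a read request against one looks like. The sketch below builds a query URL using the standard `$select`, `$filter` and `$top` query options. The service root, entity set and field names are invented for illustration, and this is not a claim about the exact requests the platform issues on your behalf.

```python
from urllib.parse import quote


def build_odata_query(service_root, entity_set, select=None, filter_expr=None, top=None):
    """Build a read-only OData v4 query URL from a few common query options."""
    options = []
    if select:
        options.append("$select=" + ",".join(select))
    if filter_expr:
        # Percent-encode spaces and other unsafe characters in the filter expression.
        options.append("$filter=" + quote(filter_expr, safe="()',"))
    if top is not None:
        options.append(f"$top={top}")
    url = f"{service_root.rstrip('/')}/{entity_set}"
    return url + ("?" + "&".join(options) if options else "")


# Hypothetical external endpoint and fields.
print(build_odata_query(
    "https://example.com/odata/v4/",
    "Products",
    select=["ProductID", "Name"],
    filter_expr="Price gt 20",
    top=10,
))
```

Every request here is a read, which lines up with the read-only restriction described above.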

Data Model Design Fundamentals

A common pitfall for any software developer working with applications, like Dynamics 365, is to miss the bleeding obvious; namely, ignoring the wide variety of entities and features available to satisfy any required business logic, such as Business Rules or Power Automate flows. When designing and implementing any bespoke data model using the CDS, you should:

- Carefully review the entire list of entities listed as part of the Common Data Model. Determine, as part of this, whether an existing entity is available that captures all of the information types you need, can be customised to include additional, minor details or whether a brand new entity will be necessary instead.

- Consider the different types of Entity Ownership options and how this relates to your security model. For example, if you need the ability to restrict records to specific users or business units, ensure that you configure the entity for User or team owned entity ownership. More details on these options can be found in the Microsoft documentation on entity ownership.

- Review the differences between a standard and an Activity entity, and choose the correct option, based on your requirements. For example, when setting up an entity recording individual WhatsApp messages to customers, use the Activity entity type.

- Digest and fully understand the list of different field types available for creation. Ensure as part of this that you select the most appropriate data types for any new fields and factor in any potential reporting requirements as part of this.

- Understand the fundamental concepts around entity relationships. You should be able to tell the differences between 1:N and N:N relationships, including the differences between native and manual N:N relationships.

- Be familiar with using tools such as Microsoft Visio and, in particular, crow’s foot notation diagrams, to help plan and visualise your proposed data model.
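To help picture the relationship types mentioned above, here is a small sketch that models a 1:N relationship and a manual N:N relationship using plain Python classes. The Event and EventRegistration entities are hypothetical examples of my own. The point to take away is that a manual N:N relationship is simply a custom intersect entity with a lookup to each side, which, unlike a native N:N relationship, can carry its own fields.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Account:
    account_id: int
    name: str


@dataclass(frozen=True)
class Contact:
    contact_id: int
    full_name: str
    parent_account_id: int  # lookup field: the "N" side of Account 1:N Contact


@dataclass(frozen=True)
class Event:
    event_id: int
    name: str


@dataclass(frozen=True)
class EventRegistration:
    """Manual N:N: a custom intersect entity between Contact and Event."""
    contact_id: int
    event_id: int
    attended: bool = False  # extra field that a native N:N could not hold


def contacts_for(account: Account, contacts: List[Contact]) -> List[Contact]:
    """Follow the 1:N relationship from an account to its contacts."""
    return [c for c in contacts if c.parent_account_id == account.account_id]


def events_for(contact: Contact, registrations: List[EventRegistration],
               events: List[Event]) -> List[Event]:
    """Follow the manual N:N relationship from a contact to its events."""
    event_ids = {r.event_id for r in registrations if r.contact_id == contact.contact_id}
    return [e for e in events if e.event_id in event_ids]


contoso = Account(1, "Contoso")
jane = Contact(10, "Jane Doe", parent_account_id=1)
launch = Event(100, "Product Launch")
webinar = Event(101, "Webinar")
registrations = [EventRegistration(10, 100, attended=True)]

print(contacts_for(contoso, [jane]))
print(events_for(jane, registrations, [launch, webinar]))
```

If you don't need to store anything about the link itself, a native N:N relationship is less to build and maintain; reach for the manual approach when the relationship has data of its own, such as the attendance flag here.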

These tips provide just a flavour of some of the things to consider when designing your Dynamics 365 / CDS data model. Future posts will dive into technical topics relating to this, which should ultimately factor back into your thinking when architecting a solution.

Hopefully, this first post has familiarised you with some of the core concepts around extending Dynamics 365 and the Power Platform. I’ll be back soon with another post, which will take a look at some of the customisation topics you need to be aware of for Exam MB-400.