Welcome to the fifteenth and penultimate post in my series focused on providing a set of revision notes for the MB-400: Microsoft Power Apps + Dynamics 365 Developer exam. Previously, we examined what events are and how they can provide a streamlined mechanism for executing complex business logic outside of Dynamics 365 online / the Common Data Service. In today’s post, we conclude our review of the Develop Integrations area of the exam, by taking a look at our final exam topic - Implement data synchronisation. For this, Microsoft expects candidates to demonstrate knowledge of the following:

Implement data synchronization

- configure and use entity change tracking

- configure the data export service to integrate with Azure SQL Database

- create and use alternate keys

For a long time now, Microsoft has provided tools that can perform simple or complex integrations involving data that resides within the Common Data Service database. In some situations, the out of the box capabilities provided to us can negate the need to implement solutions to track data changes, lookup entity records based on alternative identifiers or create replica versions of our data within a new environment. In discussing the topics listed above today, we will see how these features can achieve these objectives and more.

As with all posts in this series, the aim is to provide a broad outline of the core areas to keep in mind when tackling the exam, linked to appropriate resources for more focused study. Your revision should, ideally, involve a high degree of hands-on testing and familiarity in working with the platform if you want to do well in this exam. As we will also be covering topics such as Microsoft Azure, Azure SQL and Azure Key Vault as well, having some awareness of how to work with these tools is recommended as well.

Keeping Cloud Systems Synchronised: Key Challenges and How the Data Export Service Can Help

Although the great benefit of adopting a business applications platform like Dynamics 365 or the Power Platform is that you can significantly simplify the types of technical integrations you need to perform, there will always be situations that necessitate some form of a bespoke integration. No organisation is in the “perfect” position in having all of their core applications hosted with the same vendor or even within the cloud. As a consequence, we then have to build highly specific integrations, that may involve standing up a holding/staging database. This option can introduce several benefits, by allowing us to run complex Structured Query Language (SQL) Data Manipulation Language (DML) statements targeting our data or allow us to audit changes as they are processed. However, the main drawback of this approach is that we must not only spend time setting up this database, but also write secure and scalable code to pull data from our Dynamics 365 / Common Data Service environment.

To help overcome these challenges, Microsoft makes available the Data Export Service Managed Solution, which allows us to straightforwardly synchronise our Dynamics 365 Online / Common Data Service database with a self-hosted SQL database on Azure. All of this is configurable through the interface of the application, reducing the need to leverage a heap of custom code to accomplish what may be a simplistic integration. Using the tool, developers can:

- Synchronise a variety of entities, using custom schema/table prefixes (if needed).

- Export out data for native many-to-many (N:N) relationships.

- Provide near-real-time synchronisation capabilities, that processes delta changes within 5-10 minute intervals.

- Scale and control the performance of the integration directly from within Azure.

- Access numerous options for inspecting synchronisation errors and scheduling records for re-synchronisation.

- Incorporate any bespoke application alongside the Data Export Service by integrating alongside the exposed API endpoint.

The Data Export Service can also have other uses outside of integrations. It could be that you need to assure the business that you are backing up core business data regularly to a separate geographic region. Or, it could be that you wish to leverage complex Transact-SQL (T-SQL) queries as part of your reporting solution, that is impossible to replicate via FetchXML. In these contexts, the Data Export Service becomes an attractive option to consider.

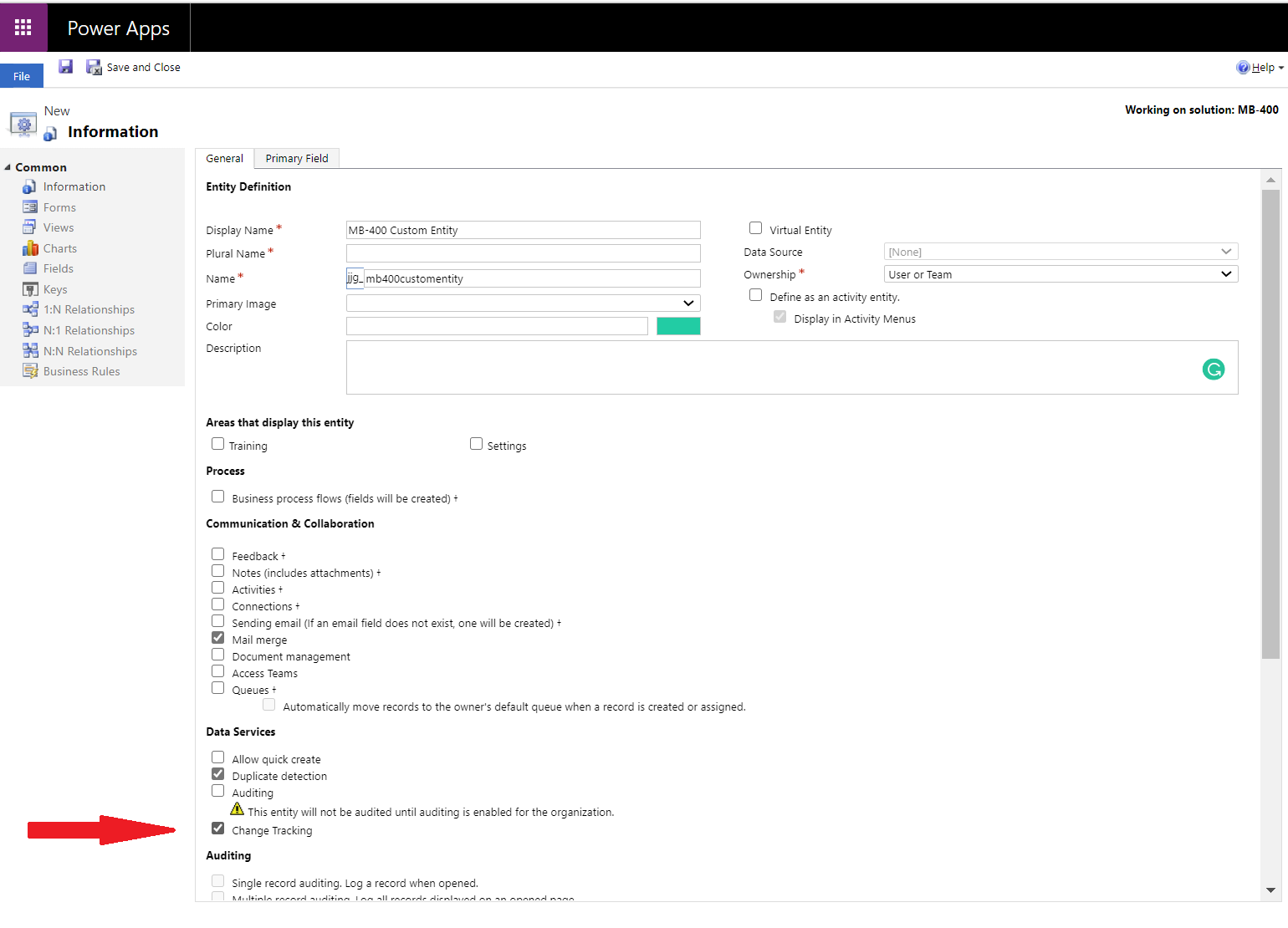

To get started with using the Data Export service, you need to ensure you have a few things set up already within Microsoft Azure. You can consult the relevant Microsoft Docs article to find out more. To summarise though, you will need to have a valid Azure SQL / SQL Server instance on a Virtual Machine that is ready to connect to and appropriate access at the subscription level to create new resources. You will also need to ensure you have enabled change tracking capability for each entity you wish to synchronise out with the service, by navigating into the classic interface and ticking the appropriate option on the Entity configuration page:

Change Tracking is most relevant in the context of the Data Export Service and, for the exam itself, the above is all you likely need to content yourself with as part of your revision. However, it may be useful to review how developers can use the Web API to retrieve changes for an entity enabled for Change Tracking and also leverage it using the SDK and, specifically, via the RetrieveEntityChangesRequest class.

Demo: Deploying & Configuring the Dynamics 365 Data Export Service

Working through all of the required steps to successfully configure the Data Export Service can be a little daunting. With this in mind, check out the video below, where I take you through each step:

You can find a copy of the PowerShell script I use on the Microsoft Docs site.

Alternate Keys: What are they, and why should I use them?

Typically, when you are integrating alongside an external system, it will contain unique record identifiers that differ from any the globally unique identifier (GUID) for each Common Data Service record. It could be that this record identifier is an integer or string value of some kind, or it could be a mixture of different attributes (e.g. using both the address and name of the customer, we can tell whether the record is unique or not). Due to the myriad of different systems available not just through Microsoft, but other vendors too, it can be a challenge to find a straightforward solution to handle this.

Enter stage left alternate keys, a useful feature we can enable on one or several attributes on an entity. By creating them, you are in effect informing Dynamics 365 Online / the Common Data Service that the contents of this field must always be unique within the system. After an alternate key is defined, the application will create a unique index in the database, covering the attributes you specify. Therefore, the hidden benefit of this is that queries targeting alternate key values will always run faster. At the time of writing this post, it is possible to use both the Power Apps maker portal and classic interface to create alternate keys; where possible, I would advise you to use the maker portal. An additional benefit with alternate keys is that the SDK supports out several capabilities that developers can easily leverage. For example, it’s possible to create new records by specifying its alternate key value and execute Web API Retrieve requests using one or multiple Alternate Key values.

Keep in mind some of the limitations concerning alternate keys:

- The platform enforces some limitations not only on the size of the alternate key (in bytes) but also on whether certain illegal Unicode characters exist in the field value. If any attribute value breaks these rules, errors will likely occur.

- You can only have a maximum of five per entity.

- The application relies on a system job to create the appropriate index for each newly defined alternate key. The processing time for this job can take a considerable amount of time to complete, depending on the size of your database. You can navigate into the classic interface at any time to track progress and also evaluate whether any errors have occurred.

- You are limited to the following data types when defining an attribute as an alternate key:

- Date and Time

- Decimal

- Lookup

- Option Set

- Single Line of Text

- Whole Number

And that’s a wrap…

…you might expect me to say at this stage. True enough, we’ve now covered all of the content within the MB-400 exam. What I wanted to do in next week’s post though is perform a round-up post that collects together all of the posts/videos published during the series, as well as sharing some general advice relating to the exam. Catch you again next week! 🙂