The introduction of Azure Data Factory V2 represents the most opportune moment for data integration specialists to start investing their time in the product. Version 1 (V1) of the tool, which I started looking at last year, lacked a lot of critical functionality that, in most typical circumstances, I could implement in a matter of minutes via a SQL Server Integration Services (SSIS) DTSX package. To quote some specific examples, the product had:

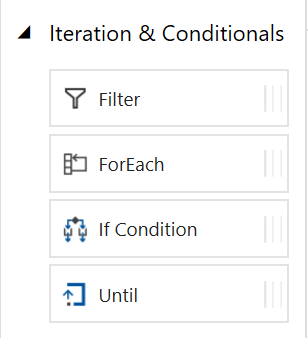

- No support for control flow logic (foreach loops, if statements etc.)

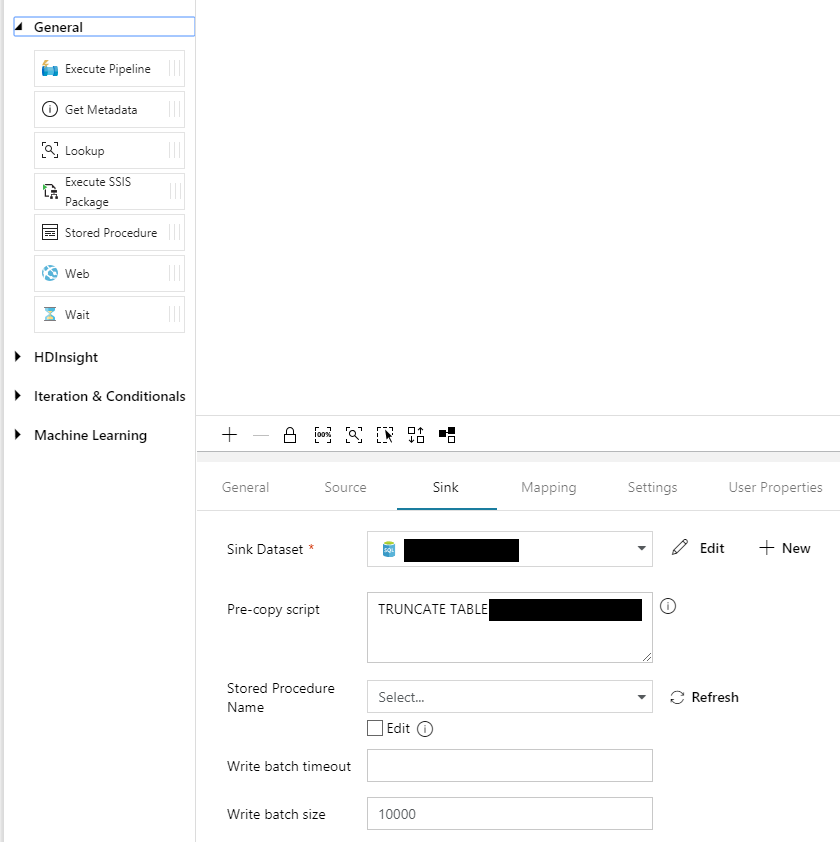

- Support for only “basic” data movement activities, with a minimal capability to perform or call data transformation activities from within SQL Server.

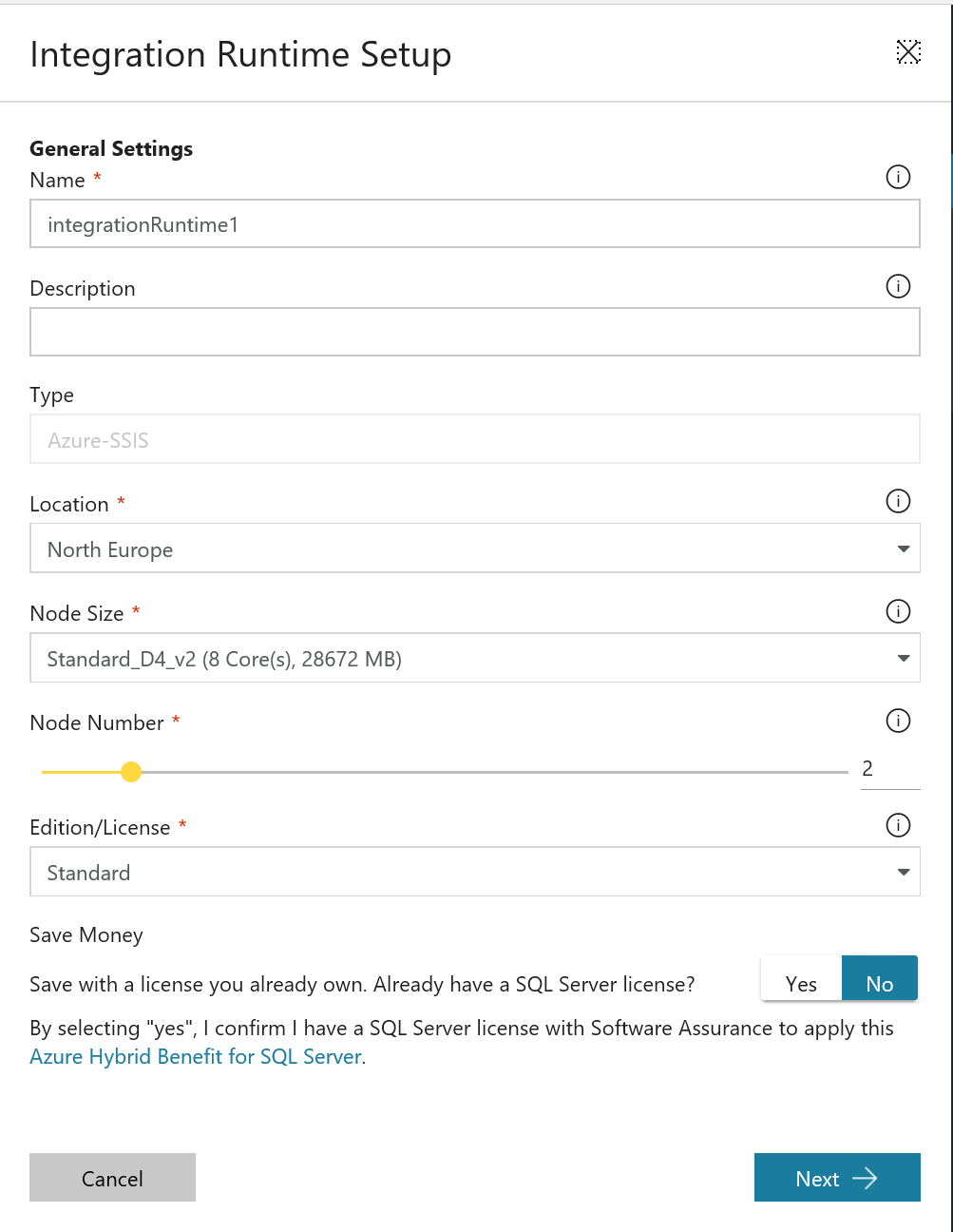

- Some support for the deployment of DTSX packages, but with incredibly complex deployment options.

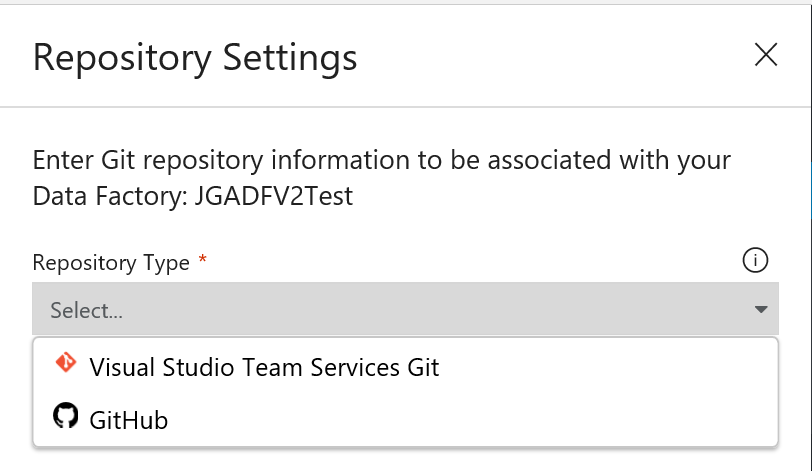

- Little or no support for Integrated Development Environments (IDEs), such as Visual Studio, or other typical DevOps scenarios.

In what seems like a short space of time, the product has come on in leaps and bounds to address these limitations:

The final one is a particularly nice touch, and means that you can straightforwardly incorporate Azure Data Factory V2 as part of your DevOps strategy with minimal effort - an ARM Resource Template deployment, containing all of your data factory components, will get your resources deployed out to new environments with ease. What’s even better is that this deployment template is intelligent enough not to recreate existing resources and only update Data Factory resources that have changed. Very nice.
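As a rough sketch of what such a deployment step might look like from PowerShell, the function below wraps the New-AzureRmResourceGroupDeployment cmdlet. The function name and its three parameters are my own placeholders rather than anything the product prescribes. Incremental mode (the cmdlet's default, stated explicitly here) is what leaves resources that are not in the template untouched.

```powershell
# Sketch only: Invoke-DataFactoryDeployment and its parameter names are hypothetical.
function Invoke-DataFactoryDeployment {
    param(
        [Parameter(Mandatory = $true)][string]$rgName,
        [Parameter(Mandatory = $true)][string]$templateFile,
        [Parameter(Mandatory = $true)][string]$parametersFile
    )

    # Collect the arguments in a hashtable and splat them onto the cmdlet.
    $deployment = @{
        ResourceGroupName     = $rgName
        TemplateFile          = $templateFile
        TemplateParameterFile = $parametersFile
        # Incremental mode updates what is in the template and leaves
        # everything else in the resource group alone.
        Mode                  = 'Incremental'
    }

    New-AzureRmResourceGroupDeployment @deployment -ErrorAction Stop
}
```

A call might then look like `Invoke-DataFactoryDeployment -rgName 'MyResourceGroup' -templateFile '.\arm_template.json' -parametersFile '.\arm_template_parameters.json'`, where the resource group and file names are made up for illustration.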

Although Azure Data Factory V2 provides a lot to assist with a typical DevOps cycle, there is one thing that the tool does not account for satisfactorily.

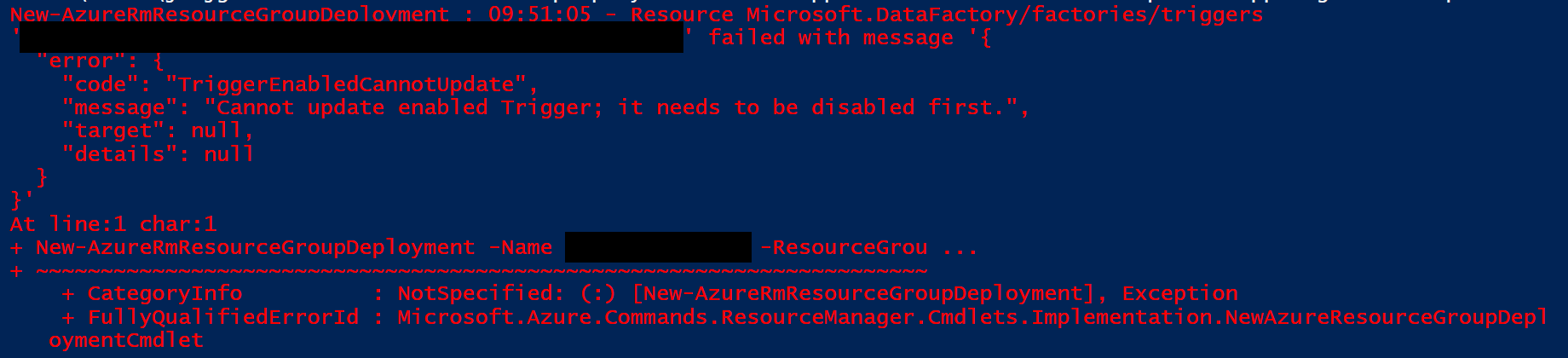

A critical aspect of any Azure Data Factory V2 deployment is the implementation of Triggers. These define the circumstances under which your pipelines will execute, typically either via an external event or based on a pre-defined schedule. Once activated, they effectively enter a “read-only” state, meaning that any changes made to them via a Resource Group Deployment will be blocked and the deployment will fail, as we can see below when running the New-AzureRmResourceGroupDeployment cmdlet directly from PowerShell:

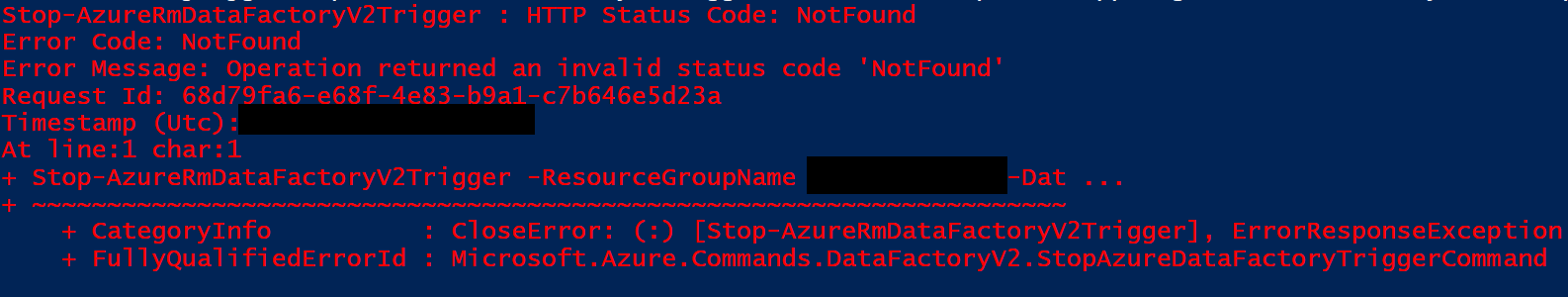

The solution is simple: stop the Trigger programmatically as part of your DevOps execution cycle via the handy Stop-AzureRmDataFactoryV2Trigger cmdlet. This step involves just a single line of PowerShell that is callable from an Azure PowerShell task. But what happens if you are deploying your Azure Data Factory V2 template for the first time? At that point the Trigger does not exist yet, so the attempt to stop it will fail.

The best (and only) way around this little niggle is to construct a script that checks whether the Trigger exists, stops it if it does, and skips over this step if it doesn’t. The following parameterised PowerShell script file will achieve these requirements by attempting to stop the Trigger called ‘MyDataFactoryTrigger’:

    # Resource group and Data Factory names are supplied by the calling task.
    param($rgName, $dfName)

    Try
    {
        Write-Host "Attempting to stop MyDataFactoryTrigger Data Factory Trigger..."
        # -ErrorAction Stop turns a failed lookup into a terminating error, so the Catch block runs.
        Get-AzureRmDataFactoryV2Trigger -ResourceGroupName $rgName -DataFactoryName $dfName -TriggerName 'MyDataFactoryTrigger' -ErrorAction Stop
        Stop-AzureRmDataFactoryV2Trigger -ResourceGroupName $rgName -DataFactoryName $dfName -TriggerName 'MyDataFactoryTrigger' -Force
        Write-Host -ForegroundColor Green "Trigger stopped successfully!"
    }
    Catch
    {
        $errorMessage = $_.Exception.Message
        if($errorMessage -like '*NotFound*')
        {
            # Expected on a first deployment, when the Trigger has not been created yet.
            Write-Host -ForegroundColor Yellow "Data Factory Trigger does not exist, probably because the script is being executed for the first time. Skipping..."
        }
        else
        {
            # Any other failure is unexpected, so fail the task.
            throw "An error occurred whilst retrieving the MyDataFactoryTrigger trigger."
        }
    }

    Write-Host "Script has finished executing."

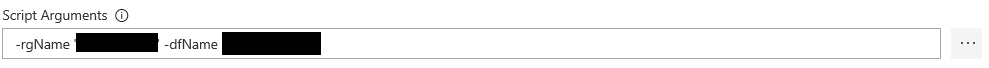

To use the script successfully within Azure DevOps, be sure to provide values for the `$rgName` and `$dfName` parameters in the Script Arguments field of the Azure PowerShell task.
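As an illustration, the Script Arguments field might contain something like the following. The resource group and Data Factory names are placeholders I have made up, so substitute your own:

```powershell
-rgName 'MyResourceGroup' -dfName 'MyDataFactory'
```

The argument names match the `param($rgName, $dfName)` declaration at the top of the script, so the order in which they are supplied does not matter.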

With some nifty copy + paste action, you can accommodate the stopping of multiple Triggers as well - although if you have more than 3-4, then it may be more sensible to perform some iteration involving an array containing all of your Triggers, passed at runtime.
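A minimal sketch of that iteration is shown below, assuming the Trigger names arrive as a string array. The function name `Stop-DataFactoryTriggers` and the `$triggerNames` parameter are hypothetical; the logic inside the loop is the same check-then-stop pattern as the single-Trigger script.

```powershell
# Sketch only: Stop-DataFactoryTriggers and $triggerNames are hypothetical names.
function Stop-DataFactoryTriggers {
    param(
        [Parameter(Mandatory = $true)][string]$rgName,
        [Parameter(Mandatory = $true)][string]$dfName,
        [Parameter(Mandatory = $true)][string[]]$triggerNames
    )

    foreach ($triggerName in $triggerNames) {
        try {
            Write-Host "Attempting to stop $triggerName Data Factory Trigger..."
            # The lookup fails with a terminating error if the Trigger does not exist.
            Get-AzureRmDataFactoryV2Trigger -ResourceGroupName $rgName -DataFactoryName $dfName -TriggerName $triggerName -ErrorAction Stop | Out-Null
            Stop-AzureRmDataFactoryV2Trigger -ResourceGroupName $rgName -DataFactoryName $dfName -TriggerName $triggerName -Force -ErrorAction Stop | Out-Null
            Write-Host -ForegroundColor Green "$triggerName stopped successfully!"
            # Emit the name so the caller knows which Triggers were actually stopped.
            $triggerName
        }
        catch {
            if ($_.Exception.Message -like '*NotFound*') {
                # Expected on a first deployment; move on to the next Trigger.
                Write-Host -ForegroundColor Yellow "$triggerName does not exist yet. Skipping..."
            }
            else {
                # Anything else is unexpected, so rethrow and fail the task.
                throw
            }
        }
    }
}
```

Calling `Stop-DataFactoryTriggers -rgName $rgName -dfName $dfName -triggerNames 'TriggerA', 'TriggerB'` (with made-up Trigger names) returns the names of the Triggers that were stopped, which could then be fed into the restart step after the deployment.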

For completeness, you will also want to ensure that you restart the affected Triggers after any ARM Template deployment. The following PowerShell script will achieve this outcome:

    # Resource group and Data Factory names are supplied by the calling task.
    param($rgName, $dfName)

    Try
    {
        Write-Host "Attempting to start MyDataFactoryTrigger Data Factory Trigger..."
        # -ErrorAction Stop ensures any failure lands in the Catch block.
        Start-AzureRmDataFactoryV2Trigger -ResourceGroupName $rgName -DataFactoryName $dfName -TriggerName 'MyDataFactoryTrigger' -Force -ErrorAction Stop
        Write-Host -ForegroundColor Green "Trigger started successfully!"
    }
    Catch
    {
        # A Trigger that cannot be restarted should fail the task.
        throw "An error occurred whilst starting the MyDataFactoryTrigger trigger."
    }

    Write-Host "Script has finished executing."
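If you would rather run the stop, deploy and start steps from a single script instead of three separate tasks, one possible arrangement is sketched below. This is an assumption on my part rather than anything the tooling requires: the function name `Invoke-DeploymentWithTriggerPaused` and the `$deployment` script block parameter are hypothetical. The `finally` block is there so that a Trigger we stopped is restarted even when the deployment itself fails.

```powershell
# Sketch only: the function name and the $deployment parameter are hypothetical.
function Invoke-DeploymentWithTriggerPaused {
    param(
        [Parameter(Mandatory = $true)][string]$rgName,
        [Parameter(Mandatory = $true)][string]$dfName,
        [Parameter(Mandatory = $true)][string]$triggerName,
        [Parameter(Mandatory = $true)][scriptblock]$deployment
    )

    $trigger = @{ ResourceGroupName = $rgName; DataFactoryName = $dfName; TriggerName = $triggerName }
    $stopped = $false
    $deployed = $false

    try {
        Get-AzureRmDataFactoryV2Trigger @trigger -ErrorAction Stop | Out-Null
        Stop-AzureRmDataFactoryV2Trigger @trigger -Force -ErrorAction Stop | Out-Null
        $stopped = $true
    }
    catch {
        # A missing Trigger is expected on a first deployment; anything else is fatal.
        if ($_.Exception.Message -notlike '*NotFound*') { throw }
    }

    try {
        # Run whatever deployment logic the caller supplied.
        & $deployment
        $deployed = $true
    }
    finally {
        # Restart if we stopped the Trigger, or if a successful deployment has just created it.
        if ($stopped -or $deployed) {
            Start-AzureRmDataFactoryV2Trigger @trigger -Force -ErrorAction Stop | Out-Null
        }
    }
}
```

The script block would typically contain the New-AzureRmResourceGroupDeployment call with `-ErrorAction Stop`, so that a failed deployment is raised as a terminating error and is not mistaken for a success.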

The Azure Data Factory V2 offering has no doubt come on in leaps and bounds in a short space of time…

…but you can’t shake the feeling that there is a lot that still needs to be done. The current release, granted, feels very stable and production-ready, but I think there is a whole range of enhancements that could be introduced to bring it closer to feature parity with SSIS DTSX packages. With these in place, and taking into account the very significant cost differences between the two offerings, Azure Data Factory V2 would be a no-brainer option for almost every data integration scenario. The future looks very bright indeed 🙂